Linear Regression

Linear regression assumes that features and outcomes satisfy a linear relationship. The expressive ability of linear relationship is strong. The influence of each feature on the result can be reflected by its parameter.

And each feature variable can be mapped to a function first, and then participate in the linear calculation. In this way, a nonlinear relationship between features and results can be expressed.

The linear model can be expressed as \(f(X)=W^{T}X+b\)

\(b\) (bias parameter): compensates for the difference between the mean of the target values and the weighted mean of the basis function values

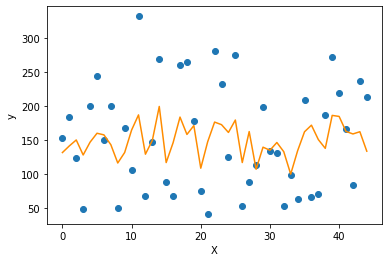

The goal is that the predicted value from model can be infinitely close to the true value: \(f(x_{i}) \approx y_{i}\)

The reason for we want infinitely close but not exactly the same is that we can only learn part of the data sampled from all the data of a certain type of event, the sampled data cannot cover all the possibilities of the event, so we can only learn the overall law in the end.

1 | |

Here we use MSE to measure the difference between \(\hat{y}\) and \(y\): \(\mathrm{MSE}=\frac{1}{n} \sum_{i=1}^{n}\left(f(x_{i})-y_{i}\right)^{2}=\frac{1}{n} \sum_{i=1}^{n}\left(\hat{y}_{i}-y_{i}\right)^{2}\)

The difference can be positive or negative so using square can erase the effect of positive and negative. After the square is used, the error out of -1 and 1 will be enlarged, and the error between -1 and 1 will be reduced at the same time. And it's unable to handle when measures vary widely across dimensions, so we have to normalize the data before modeling.

Derivation Process of Parameter Estimation in Linear Regression Model:

\(\left(w^{*}, b^{*}\right) =\underset{(w, b)}{\arg \min } \sum_{i=1}^{m}\left(f\left(x_{i}\right)-y_{i}\right)^{2} =\underset{(w, b)}{\arg \min } \sum_{i=1}^{m}\left(y_{i}-w x_{i}-b\right)^{2}\)

\(\frac{\partial E_{(w, b)}}{\partial w}=2\left(w \sum_{i=1}^{m} x_{i}^{2}-\sum_{i=1}^{m}\left(y_{i}-b\right) x_{i}\right), \quad\) \(\frac{\partial E_{(w, b)}}{\partial b}=2\left(m b-\sum_{i=1}^{m}\left(y_{i}-w x_{i}\right)\right)\)

\(w=\frac{\sum_{i=1}^{m} y_{i}\left(x_{i}-\bar{x}\right)}{\sum_{i=1}^{m} x_{i}^{2}-\frac{1}{m}\left(\sum_{i=1}^{m} x_{i}\right)^{2}}, \quad\) \(b=\frac{1}{m} \sum_{i=1}^{m}\left(y_{i}-w x_{i}\right)\)

In matrix:

\(w^{\prime} = 2X^{T}(Y-Xw) = 2X^{T}Y-2X^{T}Xw = 0\)

\(2X^{T}Y = 2X^{T}Xw \rightarrow \hat{w} = (X^{T}X)^{-1}X^{T}Y\)

\(X^{T}X\) usually isn't a full-rank matrix in reality so we need to apply regularization on it

\(\hat{w} = (X^{T}X+ \lambda I)^{-1}X^{T}Y\)

1 | |

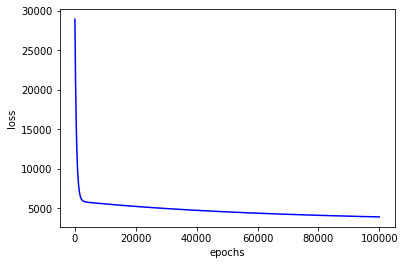

Update parameters based on gradient descent: multiple iterations are required to converge to the global minimum and a proper learning rate is necessary, but under this method, \((X^{T}X)^{-1}\) isn't required to be calculated.

1 | |

1 | |

1 | |

1 | |

1 | |

All articles in this blog adopt the CC BY-SA 4.0 agreement except for special statements. Please indicate the source for reprinting!